We’ve run over 100 technical audits this year.

Through this we’ve gained deep insights into how technical structure impacts a website’s performance in search.

This article highlights the most common technical SEO issues we encounter, and the ones that have the largest impact on organic traffic when corrected.

1. Mismanaged 404 errors

This happens quite a bit on eCommerce sites. When a product is removed or expires, it’s easily forgotten and the page “404s”.

Although 404 errors can erode your crawl budget, they won’t necessarily kill your SEO. Google understands that sometimes you HAVE to delete pages on your site.

However, 404 pages can be a problem when they:

- Are getting traffic (internally and from organic search)

- Have external links pointing to them

- Have internal links pointing to them

- Exist in large numbers on a larger website

- Are shared on social media / around the web

The best practice is to set up a 301 redirect from the deleted page to another relevant page on your site. This will preserve the SEO equity and make sure users can seamlessly navigate.

How to find these errors

- Run a full website crawl (SiteBulb, DeepCrawl or Screaming Frog) to find all 404 pages

- Check Google Search Console reporting (Crawl > Crawl Errors)

How to fix these errors

- Analyze the list of “404” errors on your website

- Crosscheck those URLs with Google Analytics to understand which pages were getting traffic

- Crosscheck those URLs with Google Search Console to understand which pages had inbound links from outside websites

- For those pages of value, identify an existing page on your website that is most relevant to the deleted page

- Set up “server-side” 301 redirects from the 404 page to the existing page you’ve identified. If you are going to serve a 4XX page, make sure that page is actually functional so it doesn’t impact user experience
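
The cross-checking steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive workflow: the function name `prioritize_404s` and the input shapes (a list of 404 URLs from your crawler, plus per-URL sessions and referring-domain counts exported from your analytics and Search Console) are assumptions made for the example.

```python
def prioritize_404s(not_found_urls, sessions_by_url, backlinks_by_url):
    """Return 404 URLs worth redirecting, most valuable first.

    All three inputs are hypothetical exports: a crawler's list of 404 URLs,
    sessions per URL from analytics, and referring domains per URL.
    """
    candidates = []
    for url in not_found_urls:
        sessions = sessions_by_url.get(url, 0)
        backlinks = backlinks_by_url.get(url, 0)
        # Keep only pages that had traffic or external links.
        if sessions > 0 or backlinks > 0:
            candidates.append((url, sessions, backlinks))
    # Sort by backlinks first, then sessions, both descending.
    candidates.sort(key=lambda row: (row[2], row[1]), reverse=True)
    return candidates


not_found = ["/old-product", "/expired-sale", "/typo-page"]
sessions = {"/old-product": 120, "/expired-sale": 15}
backlinks = {"/old-product": 4}

for url, visits, links in prioritize_404s(not_found, sessions, backlinks):
    print(url, visits, links)
```

Pages that drop out of the list (no traffic, no links) can usually be left as 404s, which keeps the redirect file short.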

2. Website migration issues

When launching a new website, design changes or new pages, there are a number of technical aspects that should be addressed ahead of time.

Common errors we see:

- Use of 302 (temporary redirect) instead of 301 (permanent) redirects. While Google recently stated that 302 redirects pass SEO equity, we hedge based on internal data that shows 301 redirects are the better option

- Improper setup of HTTPS on a website. Specifically, not redirecting the HTTP version of the site to HTTPS, which can cause issues with duplicate pages

- Not carrying over 301 redirects from the previous site to the new site. This often happens if you’re using a plugin for 301 redirects. 301 redirects should always be set up through a website’s cPanel

- Leaving legacy tags on the site from the staging domain. For example, canonical tags, NOINDEX tags, etc. that prevent pages on your staging domain from being indexed

- Leaving staging domains indexed. The opposite of the previous item, when you do NOT place the proper tags on staging domains (or subdomains) to NOINDEX them from SERPs (either with NOINDEX tags or blocking crawl via Robots.txt file)

- Creating “redirect chains” when cleaning up legacy websites. In other words, not properly identifying pages that were previously redirected and moving forward with a new set of redirects

- Not forcing either the www or non-www version of the site in the .htaccess file. This causes 2 (or more) instances of your website to be indexed in Google, causing issues with duplicate pages being indexed

How to find these errors

- Run a full website crawl (SiteBulb, DeepCrawl or Screaming Frog) to get the needed data inputs

How to fix these errors

- Triple check to make sure your 301 redirects migrated properly

- Test your 301 and 302 redirects to make sure they reach the right destination in a single step, without intermediate hops

- Check canonical tags in the same way and ensure you have the right canonical tags in place

- Given a choice between canonicalizing a page and 301 redirecting a page – a 301 redirect is a safer, stronger option

- Check your code to ensure you remove NOINDEX tags (if used on the staging domain). Don’t just uncheck the options in the plugins. Your developer may have hardcoded NOINDEX into the theme header (Appearance > Themes > Editor > header.php)

- Update your robots.txt file

- Check and update your .htaccess file
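
Redirect chains are easy to spot once the redirect map is in one place. The sketch below assumes you can export your redirects as a simple source-to-target mapping (the `legacy` dictionary is made-up sample data and `resolve_redirects` is a name chosen for this example). It follows each redirect to its final destination and counts the hops, so anything with more than one hop can be rewritten to point straight at the final URL.

```python
def resolve_redirects(redirects):
    """Map every source URL to (final destination, number of hops).

    `redirects` is a hypothetical {source: target} dict, e.g. exported
    from a crawl. Raises ValueError when a redirect loop is found.
    """
    resolved = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        hops = 1
        # Follow the chain until the target is no longer redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
            hops += 1
        resolved[source] = (target, hops)
    return resolved


legacy = {"/a": "/b", "/b": "/c", "/old": "/new"}
for source, (final, hops) in resolve_redirects(legacy).items():
    flag = "CHAIN" if hops > 1 else "ok"
    print(f"{source} -> {final} ({hops} hop(s), {flag})")
```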

3. Website speed

Google has confirmed that website speed is a ranking factor – they expect pages to load in 2 seconds or less. More importantly, website visitors won’t wait around for a page to load.

In other words, slow websites don’t make money.

Optimizing for website speed will require the help of a developer, as the most common issues slowing down websites are:

- Large, unoptimized images

- Poorly written (bloated) website code

- Too many plugins

- Heavy JavaScript and CSS

How to find these errors

- Check your website in Google PageSpeed Insights, Uptime.com, GTMetrix or Pingdom

How to fix these errors

- Hire a developer with experience in this area (find out how FTF can help)

- Make sure you have a staging domain set up so website performance isn’t hindered

- If you use WordPress or another PHP CMS, upgrade to PHP 7 where possible. This will have a big impact on speed.

4. Not optimizing the mobile User Experience (UX)

Google’s index is officially mobile first, which means that the algorithm is looking at the mobile version of your site first when ranking for queries.

With that being said, don’t exclude the desktop experience (UX) or simplify the mobile experience significantly compared to the desktop.

Google has said it wants both experiences to be the same. Google has also stated that those using responsive or dynamically served pages should not be affected when the change comes.

How to find these errors

- Use Google’s Mobile-Friendly Test to check if Google sees your site as mobile-friendly

- Check to see if “smartphone Googlebot” is crawling your site – it hasn’t rolled out everywhere yet

- Does your website respond to different devices? If your site doesn’t work on a mobile device, now is the time to get that fixed

- Got unusable content on your site? Check to see if it loads or if you get error messages. Make sure you fully test all your site pages on mobile

How to fix these errors

- Understand the impact of mobile on your server load

- Focus on building your pages from a mobile-first perspective. Responsive design is Google’s preferred option for delivering mobile sites. If you currently run a standalone mobile subdomain (m.yourdomain.com), look at the potential impact of increased crawling on your server

- If you need to, consider a template update to make the theme responsive. Just using a plugin might not do what you need, or might cause other issues. Find a developer who can build responsive themes from scratch

- Focus on multiple mobile breakpoints, not just your brand new iPhone X. 320px wide (iPhone 5 and SE) is still super important

- Test across iPhone and Android

- If you have content that needs “fixing” (Flash or other proprietary systems that don’t work on mobile), consider moving to HTML5, which will render on mobile. Google Web Designer will allow you to reproduce Flash files in HTML
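
One quick, rough signal of whether a template is responsive is the viewport meta tag. The sketch below is only a heuristic, not a substitute for Google’s Mobile-Friendly Test or real device testing. It uses Python’s standard `html.parser` to look for a `width=device-width` viewport declaration, and the class and function names are invented for this example.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Collects the content of any <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "meta" and (attr_map.get("name") or "").lower() == "viewport":
            self.viewport = attr_map.get("content") or ""


def has_responsive_viewport(html):
    """True when the page declares a device-width viewport (heuristic only)."""
    finder = ViewportFinder()
    finder.feed(html)
    return finder.viewport is not None and "width=device-width" in finder.viewport


page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(has_responsive_viewport(page))
```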

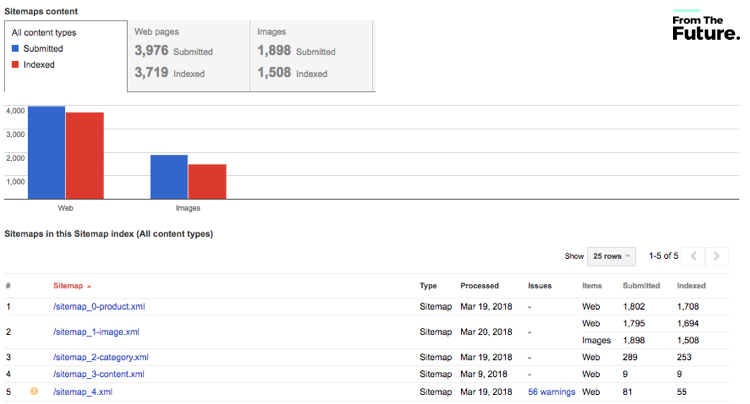

5. XML Sitemap issues

An XML Sitemap lists out URLs on your site that you want to be crawled and indexed by search engines. You’re allowed to include information about each page, such as:

- When it was last updated

- How often it changes

- How important it is in relation to other URLs in the site (i.e., priority)

While Google admittedly ignores a lot of this information, it’s still important to optimize properly, particularly on large websites with complicated architectures.
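
To make those fields concrete, here is a small sketch that reads a sitemap with Python’s standard `xml.etree.ElementTree` and lists each URL with its optional `lastmod`, `changefreq` and `priority` values. The sample sitemap and the `read_sitemap` helper are illustrative assumptions. The element names and namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

SAMPLE = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/</loc>
    <lastmod>2018-01-15</lastmod>
    <changefreq>weekly</changefreq>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>https://www.example.com/blog/</loc>
  </url>
</urlset>"""


def read_sitemap(xml_text):
    """Return one dict per <url> entry; optional fields may be None."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    entries = []
    for url in root.findall("sm:url", NS):
        entries.append({
            "loc": url.findtext("sm:loc", default=None, namespaces=NS),
            "lastmod": url.findtext("sm:lastmod", default=None, namespaces=NS),
            "changefreq": url.findtext("sm:changefreq", default=None, namespaces=NS),
            "priority": url.findtext("sm:priority", default=None, namespaces=NS),
        })
    return entries


for entry in read_sitemap(SAMPLE):
    print(entry["loc"], entry["lastmod"])
```

Entries with no `lastmod` are worth a look on large sites, since that field is the easiest way to tell a crawler what changed recently.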

Sitemaps are particularly beneficial on websites where:

- Some areas of the website are not available through the browsable interface

- Webmasters use rich Ajax, Silverlight or Flash content that is not normally processed by search engines

- The site is very large, and there is a chance for the web crawlers to overlook some of the new or recently updated content

- The website has a huge number of pages that are isolated or not well linked together

- “Crawl budget” is being wasted on unimportant pages. If this is the case, you’ll want to block crawling of those pages or NOINDEX them

How to find these errors

- Make sure you have submitted your sitemap to your GSC

- Also remember to use Bing webmaster tools to submit your sitemap

- Check your sitemap for errors (Crawl > Sitemaps > Sitemap Errors)

- Check the log files to see when your sitemap was last accessed by bots

How to fix these errors

- Make sure your XML sitemap is connected to your Google Search Console

- Run a server log analysis to understand how often Google is crawling your sitemap. There are lots of other things we will cover using our server log files later on

- Google will show you the issues and examples of what it sees as an error so you can correct them

- If you are using a plugin for sitemap generation, make sure it is up to date and that the file it generates works by validating it

- If you don’t want to use Excel to check your server logs, you can use a server log analytics tool such as Logz.io, Graylog, SEOlyzer (great for WP sites) or Loggly to see how your XML sitemap is being used
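
If you would rather script the log check, the sketch below finds the most recent time a bot requested your sitemap. It assumes your server writes the common “combined” access log format and that the sitemap lives at `/sitemap.xml`. Both are assumptions, as are the sample log lines and the `last_bot_fetch` name. User-agent strings can also be spoofed, so treat the result as indicative.

```python
import re
from datetime import datetime

# Matches the timestamp, request path and user agent of a combined-format line.
LOG_LINE = re.compile(
    r'\[(?P<time>[^\]]+)\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Mar/2018:06:25:14 +0000] "GET /sitemap.xml HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [12/Mar/2018:09:00:00 +0000] "GET /sitemap.xml HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [13/Mar/2018:09:00:00 +0000] "GET /sitemap.xml HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]


def last_bot_fetch(log_lines, path="/sitemap.xml", bot="Googlebot"):
    """Return the most recent time `bot` requested `path`, or None."""
    latest = None
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        if match["path"] != path or bot not in match["agent"]:
            continue
        seen = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        if latest is None or seen > latest:
            latest = seen
    return latest


print(last_bot_fetch(SAMPLE_LOG))
```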

6. URL Structure issues

As your website grows, it’s easy to lose track of URL structures and hierarchies. Poor structures make it difficult for both users and bots to navigate, which will negatively impact your rankings.

Common issues we see:

- Issues with website structure and hierarchy

- Not using proper folder and subfolder structure

- URLs that contain special characters or capital letters, or that are not useful to humans

How to find these errors

- 404 errors, 302 redirects, issues with your XML sitemap are all signs of a site that needs its structure revisited

- Run a full website crawl (SiteBulb, DeepCrawl or Screaming Frog) and manually review for quality issues

- Check Google Search Console reporting (Crawl > Crawl Errors)

- User testing – ask people to find content on your site or make a test purchase – use a UX testing service to record their experiences

How to fix these errors

- Plan your site hierarchy – we always recommend parent-child folder structures

- Make sure all content is placed in its correct folder or subfolder

- Make sure your URL paths are easy to read and make sense

- Remove or consolidate any content that looks to rank for the same keyword

- Try to limit the number of subfolders / directories to no more than three levels
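
A crawl export can be screened against these rules automatically. The sketch below is one possible interpretation, not an official standard: it flags capital letters, any character outside letters, digits, hyphens, dots and slashes (so underscores and encoded characters are flagged too), and more than three folder levels. The `url_issues` name and the thresholds are assumptions for this example.

```python
import re
from urllib.parse import urlsplit

MAX_DEPTH = 3  # mirrors the "no more than three levels" guideline above


def url_issues(url):
    """Return a list of structural problems found in the URL path."""
    path = urlsplit(url).path
    segments = [s for s in path.split("/") if s]
    # When the path does not end in "/", the last segment is the page itself.
    folders = segments if path.endswith("/") else segments[:-1]
    issues = []
    if any(ch.isupper() for ch in path):
        issues.append("uppercase letters")
    # Anything outside letters, digits, hyphens, dots and slashes is flagged.
    if re.search(r"[^A-Za-z0-9\-./]", path):
        issues.append("special characters")
    if len(folders) > MAX_DEPTH:
        issues.append("too many subfolders")
    return issues


for url in [
    "https://example.com/shop/shoes/running-shoes",
    "https://example.com/Shop/My_Page",
    "https://example.com/a/b/c/d/page",
]:
    print(url, url_issues(url))
```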

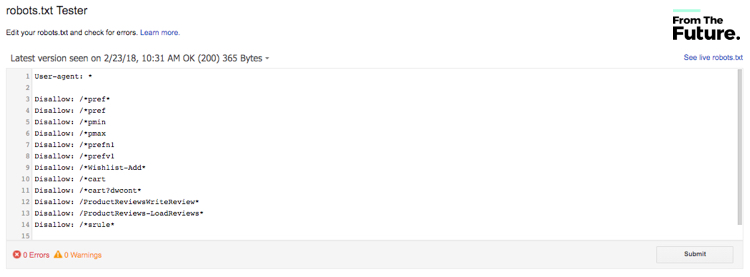

7. Issues with robots.txt file

A Robots.txt file controls how search engines access your website. It’s a commonly misunderstood file that can crush your website’s indexation if misused.

Most problems with the robots.txt tend to arise from not changing it when you move from your development environment to live or miscoding the syntax.

How to find these errors

- Check your site stats – i.e. Google Analytics for big drops in traffic

- Check Google Search Console reporting (Crawl > robots.txt tester)

How to fix these errors

- Check Google Search Console reporting (Crawl > robots.txt tester). This will validate your file

- Check to make sure the pages/folders you DON’T want to be crawled are included in your robots.txt file

- Make sure you are not blocking any important directories (JS, CSS, 404, etc.)
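
You can also test a robots.txt file offline before it goes live. The sketch below uses `urllib.robotparser` from Python’s standard library. The rules and paths are made-up samples, and `blocked_paths` is a helper named for this example. Python’s parser does not resolve overlapping Allow and Disallow rules exactly the way Google does, so treat it as a sanity check and confirm with Google’s own tester.

```python
from urllib.robotparser import RobotFileParser

LIVE_RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/
"""

# A typical staging file that must NOT be carried over to the live site.
STAGING_RULES = """\
User-agent: *
Disallow: /
"""


def blocked_paths(robots_txt, paths, agent="Googlebot"):
    """Return the subset of `paths` that `agent` is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(agent, p)]


important = ["/", "/assets/site.css", "/assets/app.js", "/cart/", "/wp-admin/options.php"]
print("live:", blocked_paths(LIVE_RULES, important))
print("staging:", blocked_paths(STAGING_RULES, important))
```

If the staging rules ever show up on the live domain, every important path appears in the blocked list, which is exactly the situation that causes sudden traffic drops.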

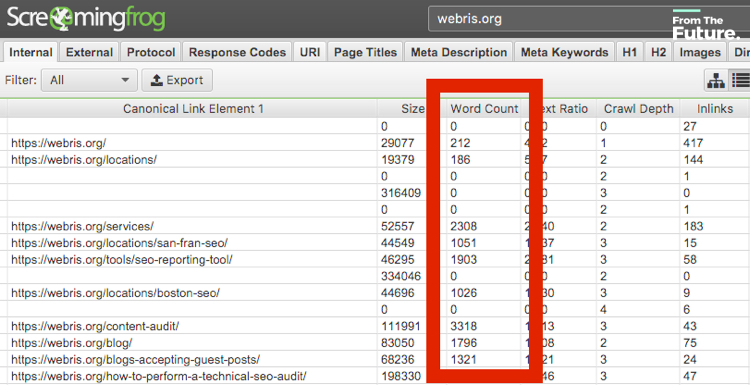

8. Too much thin content

It’s no longer a good idea to crank out pages for “SEO” purposes. Google wants to rank pages that are deep, informative and provide value.

Having too much “thin” content (i.e. pages with fewer than 500 words, no media or no clear purpose) can negatively impact your SEO. Some of the reasons:

- Content that doesn’t resonate with your target audience will kill conversion and engagement rates

- Google’s algorithm looks heavily at content quality, trust and relevancy (aka having crap content can hurt rankings)

- Too much low quality content can decrease search engine crawl rate, indexation rate and, ultimately, traffic

- Rather than generating content around each keyword, collect the content into common themes and write much more detailed, useful content.

How to find these errors

- Run a crawl to find pages with word count less than 500

- Check your GSC for manual messages from Google (GSC > Messages)

- Check whether you are failing to rank for the keywords you are writing content for, or have suddenly lost rankings

- Check your page bounce rates and user dwell time. Pages with high bounce rates and low dwell time are worth reviewing first
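
If your crawler doesn’t report word counts, a rough count is easy to produce yourself. This sketch strips tags with Python’s standard `html.parser`, ignores script and style blocks, and lists pages under the 500-word threshold used above. The `pages` dictionary stands in for HTML you have already downloaded, and the helper names are assumptions. Word count alone doesn’t prove a page is thin, so use the list as a starting point for manual review.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Counts visible words, skipping <script> and <style> content."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.words = 0
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def word_count(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.words


def thin_pages(pages, minimum=500):
    """Return URLs whose visible word count is below `minimum`."""
    return sorted(url for url, html in pages.items() if word_count(html) < minimum)


pages = {
    "/guide": "<p>" + "word " * 600 + "</p>",
    "/stub": "<p>short page</p><script>var a = 1;</script>",
}
print(thin_pages(pages))
```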

How to fix these errors

- Cluster keywords into themes so rather than writing one keyword per page you can place 5 or 6 in the same piece of content and expand it.

- Work on pages that try and keep the user engaged with a variety of content – consider video or audio, infographics or images – if you don’t have these skills find them on Upwork, Fiverr, or PPH.

- Think about your user first – what do they want? Create content around their needs.

9. Too much irrelevant content

In addition to “thin” pages, you want to make sure your content is “relevant”. Irrelevant pages that don’t help the user can also detract from the good stuff you have on site.

This is particularly important if you have a small, less authoritative website. Google crawls smaller websites less often than more authoritative ones. We want to make sure we’re only serving Google our best content to increase that trust, authority and crawl budget.

Some common instances

- Creating boring pages with low engagement

- Letting search engines crawl “non-SEO” pages

How to find these errors

- Review your content strategy. Focus on creating better pages as opposed to more

- Check your Google crawl stats and see what pages are being crawled and indexed

How to fix these errors

- Remove quotas in your content planning. Add content that adds value rather than the six blog posts you NEED to post because that is what your plan says

- Add pages to your Robots.txt file that you would rather not see Google rank. In this way, you are focussing Google on the good stuff

10. Misuse of canonical tags

A canonical tag (aka “rel=canonical”) is a piece of HTML that helps search engines decipher duplicate pages. If you have two pages that are the same (or similar), you can use this tag to tell search engines which page you want to show in search results.

If your website runs on a CMS like WordPress or Shopify, you can easily set canonical tags using a plugin (we like Yoast).

We often find websites that misuse canonical tags, in a number of ways:

- Canonical tags pointing to the wrong pages (i.e., pages not relevant to the current page)

- Canonical tags pointing to 404 pages (i.e., pages that no longer exist)

- Missing a canonical tag altogether

- eCommerce and “faceted navigation”

- When a CMS creates two versions of a page

This is significant, as you’re telling search engines to focus on the wrong pages on your website. This can cause massive indexation and ranking issues. The good news is, it’s an easy fix.

How to find these errors

- Run a site crawl in DeepCrawl

- Compare “Canonical link element” to the root URL to see which pages are using canonical tags to point to a different page

How to fix these errors

- Review pages to determine if canonical tags are pointing to the wrong page

- Also, you will want to run a content audit to understand pages that are similar and need a canonical tag
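
The comparison between a page’s URL and its canonical link element can be scripted for spot checks. The sketch below parses a page’s HTML with Python’s standard `html.parser` and reports whether the canonical tag is missing, duplicated, self-referencing or pointing elsewhere. The status labels and the `canonical_status` name are assumptions for this example. It doesn’t check whether the canonical target returns a 404, which still needs a crawl.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "link" and (attr_map.get("rel") or "").lower() == "canonical":
            self.canonicals.append(attr_map.get("href") or "")


def canonical_status(page_url, html):
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing"
    if len(finder.canonicals) > 1:
        return "multiple"
    # Ignore a trailing slash when comparing the two URLs.
    if finder.canonicals[0].rstrip("/") == page_url.rstrip("/"):
        return "self"
    return "points elsewhere: " + finder.canonicals[0]


html = '<head><link rel="canonical" href="https://example.com/shoes/"></head>'
print(canonical_status("https://example.com/shoes?color=red", html))
```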

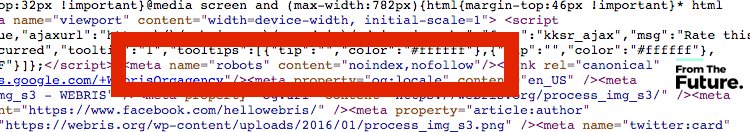

11. Misuse of robots tags

As well as your robots.txt file, there are also robots tags that can be used in your header code. We see a lot of potential issues with this used at file level and on individual pages. In some cases, we have seen multiple robots tags on the same page.

Google will struggle with this and it can prevent a good, optimized page from ranking.

How to find these errors

- Check your source code in a browser to see if a robots tag has been added more than once

- Check the syntax and don’t confuse the nofollow link attribute with the nofollow robots tag

How to fix these errors

- Decide how you will manage/control robots activity. Yoast SEO gives you some pretty good abilities to manage robot tags at a page level

- Make sure you use one plugin to manage robot activity

- Make sure you amend any file templates where robot tags have been added manually (Appearance > Themes > Editor > header.php)

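- To apply robots directives to many files at once instead of going file by file, send them in the X-Robots-Tag HTTP header. Google does not support nofollow or noindex rules placed in the robots.txt file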
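
Checking the source by hand works for a few pages. For more, a short script can count robots meta tags and flag contradictions. The sketch below is a minimal example using Python’s standard `html.parser`. The report keys and the `robots_meta_report` name are assumptions, and it only inspects the generic `robots` meta tag, not bot-specific tags or HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the content of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "meta" and (attr_map.get("name") or "").lower() == "robots":
            self.directives.append((attr_map.get("content") or "").lower())


def robots_meta_report(html):
    finder = RobotsMetaFinder()
    finder.feed(html)
    tokens = {t.strip() for d in finder.directives for t in d.split(",")}
    return {
        "tag_count": len(finder.directives),
        "duplicate_tags": len(finder.directives) > 1,
        # index + noindex, or follow + nofollow, on the same page.
        "conflicting": ("index" in tokens and "noindex" in tokens)
        or ("follow" in tokens and "nofollow" in tokens),
    }


html = (
    '<head><meta name="robots" content="index, follow">'
    '<meta name="robots" content="noindex"></head>'
)
print(robots_meta_report(html))
```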

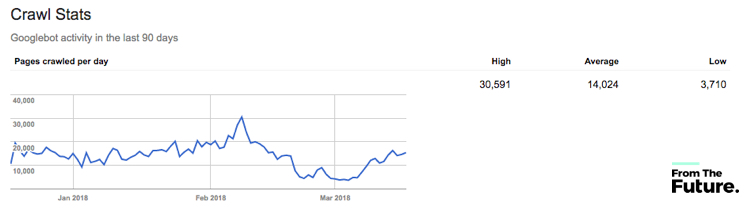

12. Mismanaged crawl budget

It’s a challenge for Google to crawl all the content on the internet. In order to save time, the Googlebot has a budget it allocates to sites depending on a number of factors.

A more authoritative site will have a bigger crawl budget (it crawls and indexes more content) than a lower authority site, which will have fewer pages and fewer visits. Google itself defines this as “Prioritizing what to crawl, when and how much resource the server hosting the site can allocate to crawling.”

How to find these errors

- Find out what your crawl stats are in GSC (Search Console > Select your domain > Crawl > Crawl Stats)

- Use your server logs to find out what the Googlebot is spending the most time doing on your site. This will tell you if it is on the right pages. Use a tool such as Botify if spreadsheets make you nauseous.

How to fix these errors

- Reduce the errors on your site

- Block pages you don’t really want Google crawling

- Reduce redirect chains by finding all those links that link to a page that itself is redirected and update all links to the new final page

- Fixing some of the other issues we have discussed above will go a long way to help increase your crawl budget or focus your crawl budget on the right content

- For eCommerce specifically, block URL parameters that are used for faceted navigation but don’t change the actual content on a page
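
To see how much crawl activity faceted navigation is absorbing, you can group crawled URLs by what remains once the facet parameters are removed. The sketch below uses `urllib.parse` from the standard library. The parameter names in `FACET_PARAMS` and the sample URLs are hypothetical, so substitute the parameters your own platform generates.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

FACET_PARAMS = {"color", "size", "sort"}  # hypothetical facet parameter names


def strip_facets(url, facet_params=FACET_PARAMS):
    """Return the URL with facet parameters removed from the query string."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in facet_params
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_by_base(urls, facet_params=FACET_PARAMS):
    """Group URLs that collapse to the same page once facets are stripped."""
    groups = defaultdict(list)
    for url in urls:
        groups[strip_facets(url, facet_params)].append(url)
    return dict(groups)


crawled = [
    "https://shop.example.com/shoes",
    "https://shop.example.com/shoes?color=red",
    "https://shop.example.com/shoes?color=blue&size=9",
    "https://shop.example.com/shoes?page=2&sort=price",
]
for base, variants in group_by_base(crawled).items():
    print(base, len(variants))
```

Base URLs with many variants are the first candidates for parameter blocking or canonical tags.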

Check out our detailed guide on how to improve crawl budget

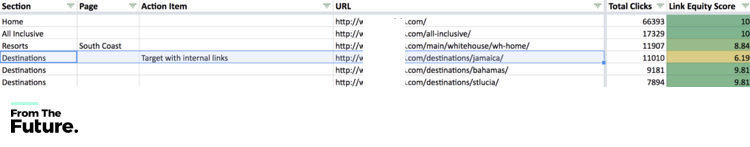

13. Not leveraging internal links to pass equity

Internal links help to distribute “equity” across a website. Lots of sites, especially those with thin or irrelevant content, tend to have less cross-linking within the site content.

Cross-linking articles and posts helps Google and your visitors move around your website. The added value of this from a technical SEO perspective is that you can pass equity across the website. This helps with improved keyword ranking.

How to find these errors

- For pages you are trying to rank, look at what internal pages link to them. This can be done in Google Analytics – do they have any internal inbound links?

- Run an inlinks crawl using Screaming Frog

- You will know yourself if you actively link to other pages on your site

- Are you adding internal nofollow links via a plugin that is applying this to all links? Check the link code in a browser by inspecting or viewing the source code

- Check whether you rely on the same small number of anchor texts and links across your site

How to fix these errors

- For pages you are trying to rank, find existing site content (pages and posts) that can link to the page you want to improve ranking for, and add internal links

- Use the crawl data from Screaming Frog to identify opportunities for more internal linking

- Don’t overcook the number of links and the keywords used to link – make it natural and across the board

- Check your nofollow link rules in any plugin you are using to manage linking
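
Once you have crawl data, finding under-linked pages is a counting exercise. The sketch below assumes you can export internal links as (source, target) pairs. The `links` and `pages` samples are invented, and the threshold of two inlinks is an arbitrary starting point rather than a rule.

```python
def inlink_counts(links, pages):
    """Count unique internal links pointing at each page."""
    counts = {page: 0 for page in pages}
    for source, target in set(links):  # ignore repeated links from one page
        if source != target and target in counts:
            counts[target] += 1
    return counts


def weakly_linked(links, pages, minimum=2):
    """Return pages with fewer than `minimum` internal inlinks."""
    counts = inlink_counts(links, pages)
    return sorted(page for page, n in counts.items() if n < minimum)


pages = ["/", "/services", "/blog/post-1", "/landing"]
links = [
    ("/", "/services"),
    ("/blog/post-1", "/services"),
    ("/", "/blog/post-1"),
    ("/", "/services"),  # duplicate link, counted once
]
print(weakly_linked(links, pages))
```

Pages you are trying to rank that appear in this list are the ones to link to from existing, relevant content.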

14. Errors with “on page” markup

Title tags and metadata are some of the most abused code on websites, and have been for as long as Google has been crawling them. As a result, many site owners have pretty much forgotten about the relevance and importance of title tags and metadata.

How to find these errors

- Use Yoast to see how well your site titles and metadata work – red and amber mean more work can be done

- Check for keyword stuffing in the keyword tag. Are you using keywords in your keyword tag that do not appear in the page content?

- Use SEMrush and Screaming Frog to identify duplicate title tags or missing title tags

How to fix these errors

- Use Yoast to see how to rework the titles and metadata, especially the meta description, which has undergone a bit of a rebirth thanks to Google’s increase in the displayed character count. Meta description data used to be set to 255 characters, but the average length now displayed is over 300 characters, so take advantage of this increase

- Use SEMrush to identify and fix any missing or duplicate page title tags – make sure every page has a unique title tag and page meta description

- Remove any non-specific keywords from the meta keyword tag
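
If you already have a crawl export, missing and duplicate titles can be found without a paid tool. The sketch below assumes a simple URL-to-title mapping (the sample data and the `title_issues` name are made up for illustration) and compares titles case-insensitively.

```python
from collections import defaultdict


def title_issues(titles_by_url):
    """Return (URLs with missing titles, {title: URLs sharing it})."""
    missing = sorted(
        url for url, title in titles_by_url.items() if not (title or "").strip()
    )
    by_title = defaultdict(list)
    for url, title in titles_by_url.items():
        if (title or "").strip():
            by_title[title.strip().lower()].append(url)
    duplicates = {
        title: sorted(urls) for title, urls in by_title.items() if len(urls) > 1
    }
    return missing, duplicates


titles = {
    "/": "Acme Shoes | Home",
    "/shoes": "Running Shoes",
    "/shoes?page=2": "running shoes",
    "/contact": "",
    "/about": None,
}
missing, duplicates = title_issues(titles)
print("missing:", missing)
print("duplicates:", duplicates)
```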

Bonus: Structured data

With Google becoming more sophisticated and offering webmasters the ability to add different markup data to display in different places within their sites, schema markup can get messy. Common types include:

- Map data

- Review data

- Rich snippet data

- Product data

- Book Reviews

It is easy to see how this can break a website or just get overlooked as the focus is elsewhere. The correct schema markup data can in effect allow you to dominate the onscreen element of a SERP.

How to find these errors

- Use your GSC to identify what schema is being picked up by Google and where the errors are. Search Appearance > Structured Data – If no snippets are found this means the code is wrong or you need to add schema code

- Use your GSC to identify what schema is being picked up by Google and where the errors are. Search Appearance > Rich Cards – If no Rich Cards are found this means the code is wrong or you need to add schema code

- Test your schema with Google’s own markup helper

How to fix these errors

- Identify what schema you want to use on your website, then find a relevant plugin to assist. All in One Schema plugin or RichSnippets Plugin can be used to manage and generate a schema.

- Once the code is built, test it with Google’s markup helper

- If you aren’t using WordPress, you can get a developer to build this code for you. Google prefers the JSON-LD format so ensure your developer knows this format

- Test your schema with Google’s own markup helper
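
For sites without a plugin, JSON-LD can be generated from data you already hold. The sketch below builds a schema.org `Product` snippet with Python’s standard `json` module. The product values and the `product_jsonld` helper are invented for this example, and the properties shown are a small subset. Which ones you need depends on the rich result you are targeting, so validate the output before shipping it.

```python
import json


def product_jsonld(name, description, price, currency, rating=None, review_count=None):
    """Return a <script> tag containing schema.org Product markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
    }
    # Only add rating data when both values are available.
    if rating is not None and review_count is not None:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


print(product_jsonld("Trail Shoe", "Lightweight trail running shoe", 89.5, "USD",
                     rating=4.6, review_count=37))
```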

Wrapping it up

As search engine algorithms continue to advance, so does the need for technical SEO.

If your website needs an audit, consulting or improvements, contact us directly for more help.